Symbiotic machines

Do we have to understand to create?

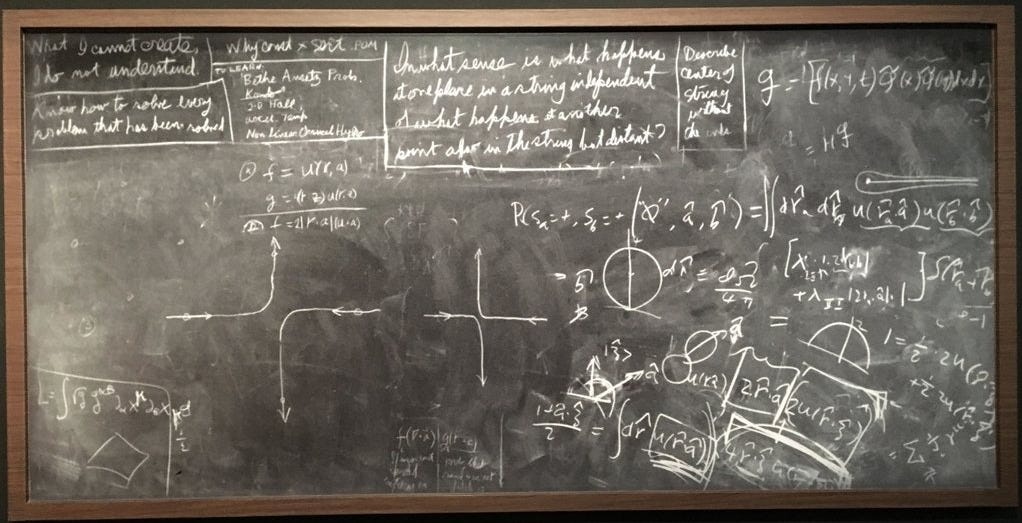

Synthetic biologists have been haunted by a message left behind by Richard Feynman when he died. On the corner of the blackboard in his office at Caltech he had written: “What I cannot create, I do not understand.”

This phrase has been a rallying cry for synthetic biology, driving many of the early principles of the field—in particular the focus on rational design of new biological systems from fully characterized and deeply understood elements. For synthetic biologists, the goal was to do “real” engineering, to validate our understanding of the details of biological systems through the crucible of trying to build them up again from modular parts.

But of course there is some irony in using a quote from a theoretical quantum physicist as a foundation of an engineering mindset. Feynman was also quoted as saying “I think I can safely say that nobody understands quantum mechanics.” Perhaps it is worth thinking more deeply about the relationship between understanding and creating.

With biology, we create many things we do not fully understand. From the earliest biotechnologies of domestication, breeding, and fermentation, humans have created many profound things with biology, millennia before knowing that cells or DNA even existed. Today, synthetic biologists can synthesize whole genomes. The bacterial genome built in 2010 by the J. Craig Venter Institute (whose sequence included a misquote of Feynman’s phrase, “What I cannot build, I cannot understand”) contained many hundreds of genes, a significant percentage of which were, and remain, of completely unknown function.

Biology offers a completely different paradigm for (not-real?) engineering, one that doesn’t stress the unknowns but takes advantage of the fact that living things just work. Alongside genetic engineering and synthetic biology, there has been a parallel world of bio-enabled engineers building biohybrid robots, which use living organisms’ ability to sense and respond to their environment as the computational basis for the function of mechanical robots. Many organisms like fungi and insects can sense light and dark, and have been used as the computational center of light-responsive robots.

Mishra et al. (2024), “Sensorimotor control of robots mediated by electrophysiological measurements of fungal mycelia,” Science Robotics.

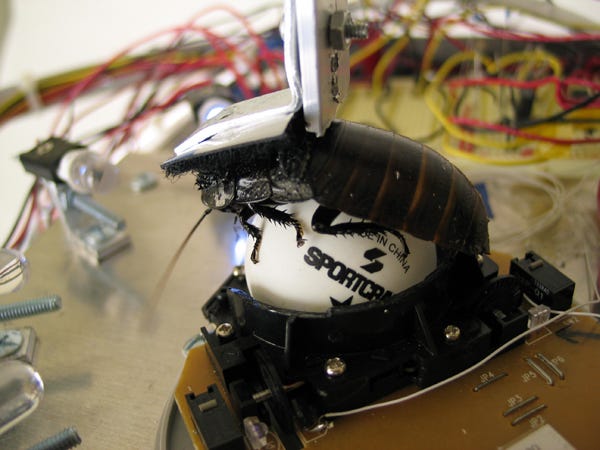

Writing on Garnet Hertz’s Cockroach Robot, which scurries away from light as the cockroach scurries over the trackball it is velcroed onto, the historian Andrew Pickering details how this cybernetic approach to engineering (“biological computing”) diverges from the “real” engineering approach that synthetic biologists sought to build into biology:

> We can note that centuries of engineering and science go into the manufacture of valves, chips and electronic circuits in general. The human race has had to accumulate an enormous amount of material infrastructure, knowledge and expertise to put us in a position to build and program computers. Hertz instead needed to know almost nothing about roaches to build his machine. The roach remains, in a cybernetic phrase, a black box, known only in terms of its performative inputs and outputs—it runs away from light, and that’s it. If the construction of a conventional robot entails ripping matter apart and reassembling it in accordance with our designs, Hertz’s involves a different strategy: the entrainment of the agency of nature, intact into a human project, without penetrating it by knowledge and reforming it.
>
> In relation to Hertz’ biological computing, the long and arduous conventional history of science and technology, industry and warfare, leading up to mainstream contemporary robotics looks like a massive detour—penetrating, knowing and rearranging matter at vast expense of money and effort. Hertz’ robots show that there is another and much simpler way to achieve comparable ends without the detour. We can see two different stances towards matter in play here: the conventional one that involves penetrating black boxes through knowledge, and the cybernetic one that seeks to entrain boxes that remain black into our world.

There is a rich history in art and science of these sorts of symbiotic cybernetic machines, driven by the needs and wants of cells, organisms, and brains, to act in ways both functional and symbolic. Even when the goals are practical, the outcomes are poetic—“robots moved by slime mould’s fears.”

Today, artificial intelligence is blurring the boundary between these two paradigms. The technological prowess and understanding needed to build and program computer chips reaches its contemporary pinnacle in systems designed to mimic what we understand of the ways neurons connect in biological brains, and those systems now produce behaviors that we cannot understand. Researchers seek interpretability, studying the outputs of generative models as if they were natural black-box systems. And more recently, researchers are building new kinds of chips for artificial actual intelligence, hybridizing silicon with living neurons, a white box that takes advantage of how living cells learn.

At the same time, the unknowable and unpredictable generativity of machine learning systems is core to their value and potential as a new technological paradigm. James Bridle’s fantastic book Ways of Being explores the histories, entanglements, and potential future of multiple intelligences. They propose a vision for better, more ecological machines, which contribute to the flourishing of communities of humans and non-humans. One of the core conditions of such a vision is unknowing, which “means acknowledging the limitations of what we can know at all, and treating with respect those aspects of the world which are beyond our ken, rather than seeking to ignore or erase them”:

> To exist in a state of unknowing is not to give in to helplessness. Rather, it demands a kind of trust in ourselves and in the world to be able to function in a complex, ever-shifting landscape over which we do not, and cannot, have control… Many of our most advanced contemporary technologies are already tuned towards unknowing, none more so than machine-learning programmes, which are specifically designed for situations which are not accounted for in their existing experience. Applications such as self driving cars, robotics, language translation, and even scientific research – the generation of knowledge itself – are all moving towards machine-learning approaches precisely because of this realization that the appropriate response to new stimuli and phenomena cannot be pre-programmed. Nonetheless, such programmes can all too easily continue to ignore or erase actual reality – with devastating consequences – if they perceive themselves in the same way that we, their creators, have always seen ourselves: as experts, authorities and masters. To be unknowing requires such systems to be in constant dialogue with the rest of the world, and to be prepared, as the best science has always been, to revise and rewrite themselves based on their errors.

Many people are excited about the possibilities of AI to finally give us a handle on the complexity of biology, to finally be able to truly understand enough to create and control biology. But what if instead it helps us to end the detour through our modern deterministic view of biology and engineering? What if biological engineering ends up looking a lot different than what we have come to think of as “real” engineering?

Stafford Beer, the 1960s operations theorist who exemplified the cybernetic view in Pickering’s history, sought out the generative function of lively materials rather than the top-down programming and control of “inert lumps of matter” according to a blueprint:

> As a constructor of machines man has become accustomed to regard his materials as inert lumps of matter which have to be fashioned and assembled to make a useful system. He does not normally think first of materials as having an intrinsically high variety which has to be constrained. . . [But] We do not want a lot of bits and pieces which we have got to put together. Because once we settle for [that], we have got to have a blueprint. We have got to design the damn thing; and that is just what we do not want to do.

Biotechnology was born during the ascendance of the “real engineering” paradigm. We have been taught to think of the genome as the “blueprint” or “program” determining the functions of living things. But other metaphors are possible. In a recent preprint, Benedikt Hartl and Michael Levin survey research that treats the genome not as a program but as a generative model akin to an LLM:

> The new ideas being developed try to go beyond ‘blueprint’ or ‘program’ metaphors and instead identify the genome as compressed latent variables…that instantiate organismal development literally as a generative model. It is hypothesized that the genome comprises compressed latent variables that are shaped or encoded by evolution and natural selection, and decoded by a generative model implemented by the cells of the developing embryo that is strikingly similar to the way information is processed in biological and engineered cognitive systems. Development can thus be interpreted as hierarchical generative decoding process from a single cell into a mature organism that is similar but not identical to its ancestors, a reconstruction with variational adaptations and mutations, but also dynamic and flexible interpretation of past information as suitable for new contexts.

What could be possible with a generative synthetic biology?

This could be a pretty important conceptual leap: to move from thinking of a static blueprint, or even a set of letters, to a full-on generative variational autoencoder. A metaphor with a much more flexible texture: an expansive, emergent system that just encodes endless possibilities.

I explored a similar thought space recently through the lens of scientific methodology and appreciated reading your take. In addition to being a detour, I think this approach is leading us toward dead ends. Maybe it was the best we could do with the tools we had. But now we have different tools, and it’s time for new approaches.