Hundreds of daily decisions

Part 2 in a collaborative series on taste and science

Oscillator and The Hard Thing are blogs by people who have spent many years thinking together about the applications and implications of new technologies in the business of biology. We’re teaming up for a series of posts about AI, biotech, and the ineffable magic of taste.

A fun thing to do with young kids (or students in an intro to CS class) is to ask them to explain how to do simple tasks in the excruciating detail required to actually do them.

How do you make a peanut butter sandwich? Well…put the peanut butter on the bread!

No! Not like that!! Ok fine… Take out two slices of bread, open the peanut butter jar, and put a scoop of peanut butter on each of the two slices of bread.

What’s wrong with you?!?! Use a knife to get the peanut butter out and then spread it all over each slice of bread.

*sigh….* No… you open the jar first…. I give up!

Ok, you get the idea.

What’s intuitive and obvious to us, as experts, is hard to proactively describe, even though it is trivial to spot the errors when they occur. Even worse, every step we might write in a protocol opens up many more questions. Is the peanut butter in the pantry or did I leave it out in the dining room? Are the knives in the drawer or are they still in the dishwasher? Is the peanut butter warm enough to spread easily or do you have to adjust the pressure you use to spread? How much peanut butter do you like on your sandwich? How do you move the knife to get it spread evenly across all the bread? What is the right kind of bread? Oh no, is the bread moldy? And now the kids want mac and cheese anyway…!!!

When we actually do these things, drawing on the practiced know-how that comes from having made many peanut butter sandwiches, we don’t have to consciously think about every one of these decisions and dependencies. Because these choices and adjustments happen unconsciously, we might think that describing a plan of action is straightforward, but even the simplest actions and plans hold a surprising amount of detail.

The same is true in science and technology.

In the 1980s, the anthropologist of technology Lucy Suchman was a researcher at Xerox PARC, observing how people worked with photocopiers. Her research showed that people rarely followed the explicit rules and instructions in the manual. Instead, users relied on ad hoc, situated reasoning to troubleshoot the problems they encountered. She built on these observations to develop a broader theory of plans versus situated actions: while the cognitive scientists and engineers she worked with saw action as the execution of plans and rules, social scientists like Suchman observed how people acted through countless small decisions, contingent on the situation at hand.

In our previous post, we wrote about how these kinds of actions and choices matter for good science, from the highest-level choice of which problem to focus on down to the simplest flick of an Eppendorf tube.

This kind of taste and tacit knowledge is taking on new importance in the age of AI, in two divergent ways:

First, as automation challenges human expertise, we fight back by highlighting the depth and detail of the work we do, and the richness of experience that cannot (yet) be automated.

At the same time, automation is indeed challenged by precisely that richness and complexity, and those working to automate various kinds of work increasingly find themselves focused on making the tacit elements of human action explicit, so that they can enter the training sets of machines.

Subjective experience and human craft are most often discussed in the domain of the arts, and these arguments are most visible in the debate over whether AI can create art. In a piece for The New Yorker arguing that AI can never make art, the author Ted Chiang focuses on those real-time choices and the situated practice of crafting a story one word at a time, contrasting it with the automated processes that take over when you prompt an AI:

Art is notoriously hard to define, and so are the differences between good art and bad art. But let me offer a generalization: art is something that results from making a lot of choices. This might be easiest to explain if we use fiction writing as an example. When you are writing fiction, you are—consciously or unconsciously—making a choice about almost every word you type; to oversimplify, we can imagine that a ten-thousand-word short story requires something on the order of ten thousand choices. When you give a generative-A.I. program a prompt, you are making very few choices; if you supply a hundred-word prompt, you have made on the order of a hundred choices.

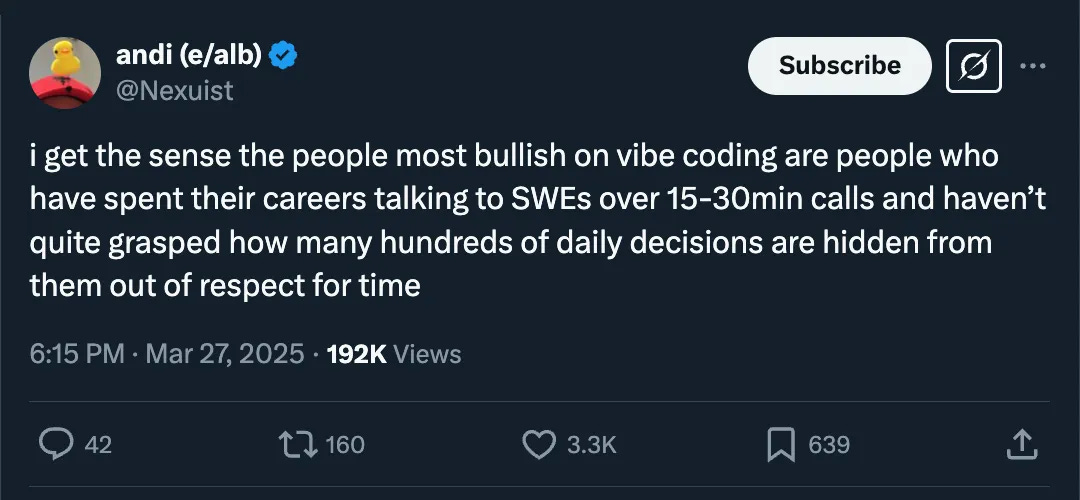

While this may seem most relevant to areas such as art and literature, it has been fascinating to see the same kinds of arguments emerge in disciplines far more likely to see themselves as objective domains of pure technical skill and reasoning. A recent tweet from a software engineer pushing back against “vibe coding” captures the sentiment well:

Whether it is the highly technical work of coding a piece of software or the artistic work of writing a short story, human effort is made up of a myriad of daily decisions. Work, whether artistic or technical, does not proceed according to a predetermined plan that workers simply execute; it proceeds through the situated actions of people in relation to their work, materials, coworkers, competitors, and customers: the real world.

Science works the same way. Observations, hypotheses, experimental design, measurement techniques, and data analysis have enormous degrees of freedom that scientists navigate with hundreds of daily decisions, guided by knowledge, experience, mentorship, intuition, and taste.

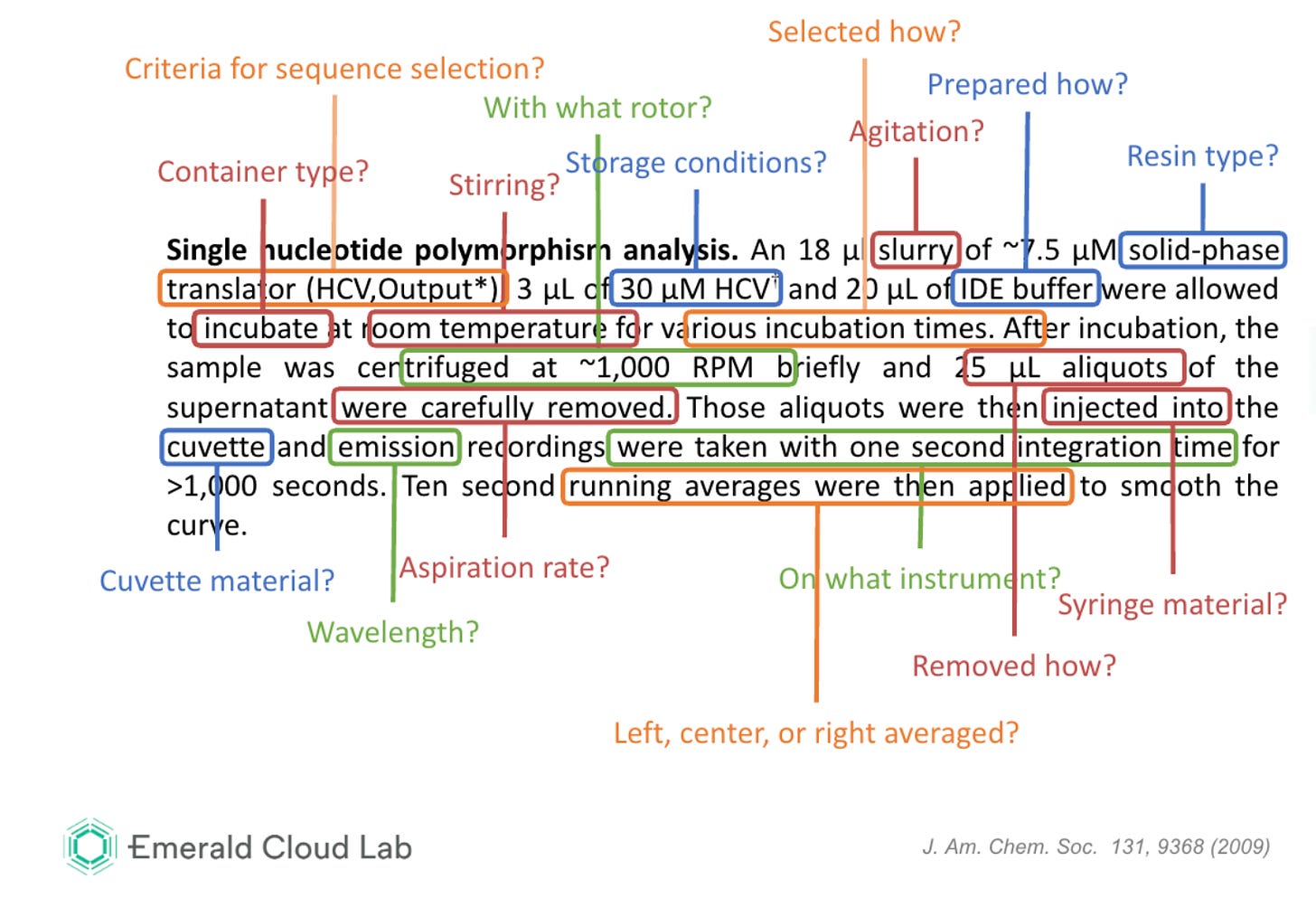

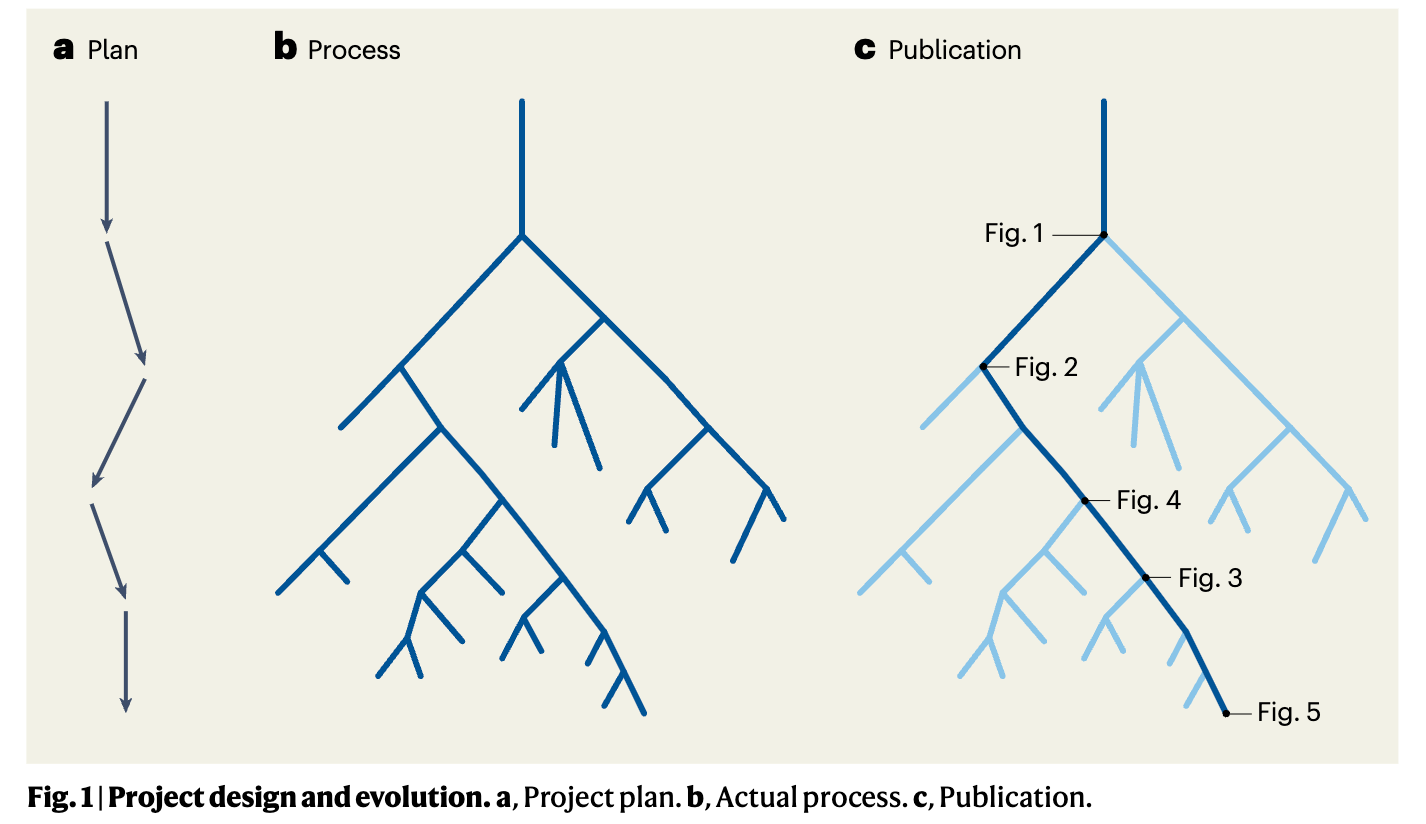

But, like the coding decisions hidden out of respect for time, the way we structure and communicate science rarely leaves room for explicitly defining these choices. A recent perspective by Itai Yanai and Martin J. Lercher on the need for open-endedness in science highlights the differences between our linear proposals, the actual process of exploring many paths and decisions in the lab, and the final paper that shows only the winning path. Publications describe the experiments that won, but almost never what it took to troubleshoot the right questions, the right protocol, the right type of cells, the proper flick of the test tube.

Why do we hide these daily decisions? And what are the consequences of keeping them hidden?

Harry Collins is a sociologist of science who has spent decades studying how physicists perform and communicate scientific work. How are findings made? Replicated? Trusted? In 2001, he published a study of how, after 20 years, a group of physicists in Glasgow finally replicated a surprising measurement by a Russian research group showing that the quality factor (“Q”) of sapphire crystals was high enough for sapphire to be used in the instruments that would one day detect gravitational waves.

Over the prior twenty years, no other group had been able to replicate this measurement. The breakthrough came when the Scottish and Russian teams spent months together recreating the experimental setup. The Scottish team had to experience first-hand the meticulous and rigorous, yet extremely fussy and highly specific, choices that made the remarkable result possible. In his paper “Tacit Knowledge, Trust and the Q of Sapphire,” Collins offers a taxonomy of what keeps this knowledge tacit, including the “tricks of the trade” that we hold back on purpose so as not to help the competition, the details that seem superfluous and that we cut to meet journal page limits, and the messy human details of our choices, challenges, mistakes, and dead ends that we leave out in deference to scientific cultural norms.

It is precisely these norms (the cultural pressure to present scientific results as effortless, and to show only the successful branches of our path to a result) that keep so much critical knowledge hidden and contribute directly to the difficulty of replicating complex science. Collins recommends one simple remedy for the replication crisis in science: in a single sentence in the methods section, share how hard it was to set up the experiment, how many tries it took to get it right, and how long each attempt took. Even without spelling out all the tacit details, acknowledging how hard they were to come by tells others how long to persist when trying to replicate the result.

Collins closes his paper by conceding that changing these cultural norms in science will not be easy, and indeed, in the quarter century since it was published, the case that making tacit knowledge more visible could increase trust, replicability, tech transfer, and ultimately the impact of science has barely moved the needle on scientific culture. Tacit knowledge and the hundreds of daily decisions a scientist makes are still rarely discussed, though that is finally starting to change a bit, thanks to the threat of AI: whether the fear is that AI will automate the risky misuse of science and the creation of bioweapons (too hard, because of tacit knowledge), or that it will automate the job of science away from humans (too hard, because of tacit knowledge, among other things).

What might be possible if we truly take tacit knowledge seriously and reframe the relationship between AI and the taste needed to make something in the real world?

Anicka Yi is a visionary artist who creates immersive experiences leveraging multimedia displays, advanced technologies, and even living organisms. Grieving the death of her sister, Yi began to ask what would happen to her art after her own death. Would it be possible to train an AI as a “digital twin” of her creative practice, to continue creating her work even after her passing?

Speaking on the Artwrld podcast, Yi describes how this idea got her thinking about her own studio as a living, creative organism, and about how she might study it:

[W]hat is the creative gene expression of the studio? How do I understand this? So running a research studio, we started to research how we could start to have a forensic analysis of how we make art. We started to research and record a lot of our meetings and all these different textures and different traces of how artworks come together…and start to develop an algorithm to perhaps create a digital twin of the studio…This digital twin could be an evolving entity so that it could function as a facilitator, an archivist, a storyteller, a production assistant…and generate new artworks even in my absence.

For Yi, this quest felt both like training an artistic apprentice (albeit a disembodied one) and also like the explorations of a biologist, studying the dynamic living organism that is her studio.

An apprentice learns through interactive mimicry and experimentation in a context where tacit knowledge can be directly transferred in the moment. What are the right choices, the right movements, the right moments to stop? They see each of those decisions in practice and their own attempts are corrected in the moment, until they achieve mastery.

A biologist learns by perturbing and measuring, reverse engineering the wiring of the individual parts and mechanisms that make up the whole. Just as plans fail to capture the richness of situated actions and research papers fail to capture the complexity of tacit knowledge, knowing the sequence of the genome is not enough (at least today) to predict the behavior of an organism in response to different conditions.

In these rich, complex, and tasteful domains of art and science, will AI be able to learn as much as a human apprentice? Will it learn more? Will it be able to pass that expertise along and teach others? What might be possible if it can?
