Vibe coding a genome

AI designed DNA is coming into focus

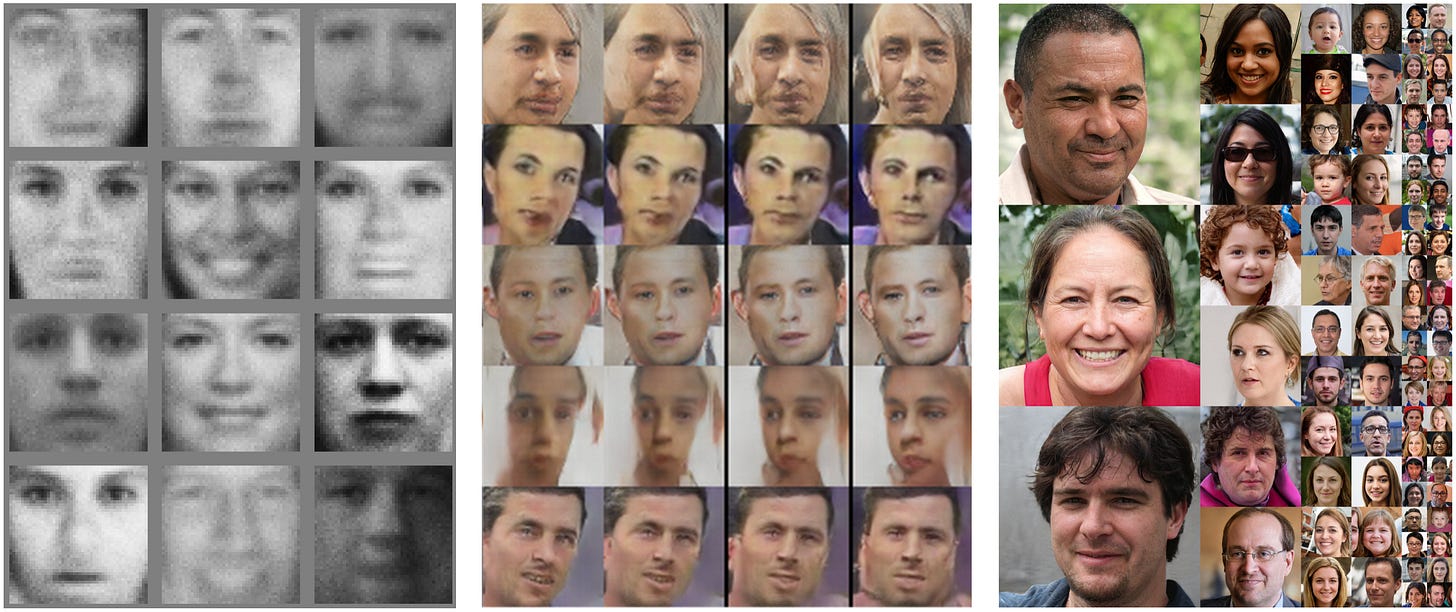

Over the past decade, AI-generated faces have come sharply into focus. The transition from the blurry faces made by the first generative adversarial networks to the choppy strangeness of faces made by deep convolutional GANs to StyleGAN and thispersondoesnotexist.com happened in five years. The other day, my sister sent me a picture of my daughter riding a unicorn down our street generated by nano banana.

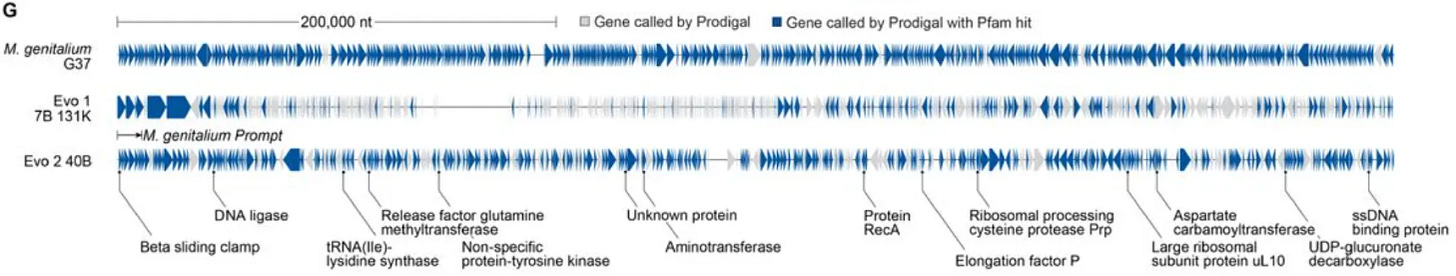

Last fall, the Arc Institute published Evo1, a biological foundation model trained on a database of 300 billion DNA nucleotides from bacterial genome sequences. They then prompted Evo1 to generate its own version of genome of the bacteria Mycoplasma genitalium, a sexually transmitted pathogen that also happens to have the smallest known genome of a free-living organism. This was the prompt:

|d__Bacteria;p__Tenericutes;c__Mollicutes;

o__Mycoplasmatales; f__Mycoplasmataceae;

g__Mycoplasma;s__Mycoplasma genitalium||The team evaluated the generated genomes with a range of computational tools to see how closely it resembled evolved genomes (unlike faces, we can’t just look at a genome sequence on a screen and see if it looks right). They concluded that while this generated M. genitalium genome resembled a genome in many ways,

These samples represent a “blurry image” of a genome that contains key characteristics but lacks the finer-grained details typical of natural genomes.

Like the blurry faces from the first GAN image generators in 2014, these genomes are too fuzzy and out of focus to be real, but it’s easy to imagine the trajectory of how they might improve.

Seven months ago, the Arc Institute introduced Evo2, a biological foundation model trained on 9.3 trillion base pairs of DNA from all domains of life. Using the first 10,000 letters of M. genitalium genome as a prompt, Evo2 could generate the remaining 570,000 letters. 70% of the genes generated looked enough like natural genes to be recognized by an automated gene annotation tool. The blurry image was coming into focus.

Last week the Arc team published a preprint reporting how they used Evo2 to generate the genome of ΦΧ174, a virus that can infect and kill E. coli. They fine tuned Evo2 with 15,000 Microviridae genomes, a family of tiny phages that infects gut bacteria. Prompting with just a handful of nucleotides from the start of the ΦΧ174 genome generated thousands of different full-length genomes that they could prune down to the ones that looked most virus-like.

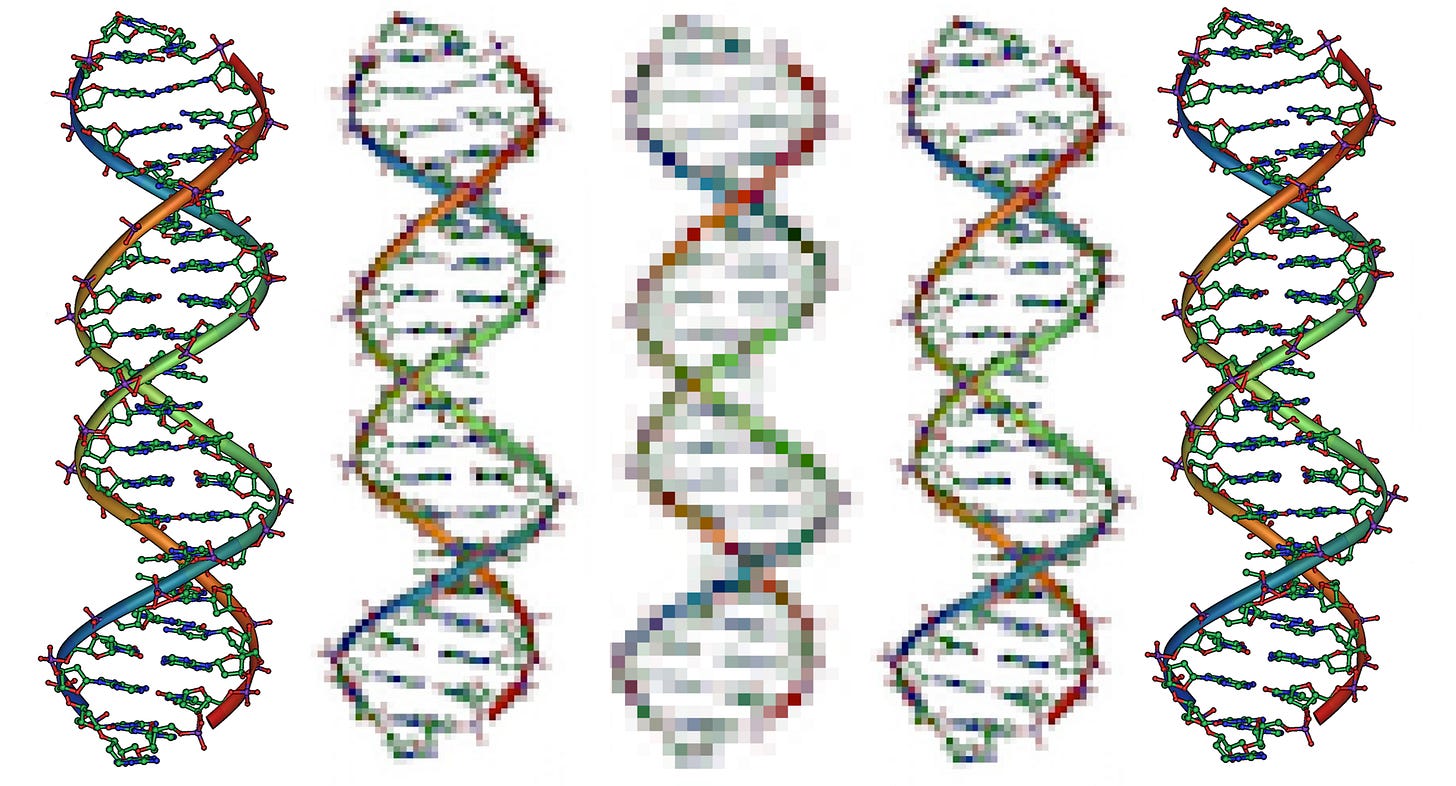

Digitally, these AI-designed genomes were getting much sharper —the architecture of their genes, the structure of the proteins the genes encoded, and other features of the genome look more and more like natural sequences. These genomes do not exist naturally, but unlike AI-generated faces, genomes can be taken out of the computer and brought to life in the real world.

From those thousands, the team chose 302 to synthesize and attempt to “reboot” into functional viruses. 285 of those could be synthesized and transformed into E. coli. Sixteen of those sequences, when read and translated by the E. coli produced functional viruses that killed their hosts. Three of those 16 were able to outcompete wild type ΦX174, and a cocktail of all 16 was able to quickly evolve to infect strains of E. coli that were resistant to natural ΦX174 infection. These genomes didn’t just look right, they worked.

Over the past five decades, bioengineers’ manipulations of this tiny phage genome have marked profound shifts in our ability to program biological “code”. ΦX174 was the first genome to ever be sequenced, in 1977, and the first genome to be synthesized, in 2003. In 2015, its genome was one of the first genomes to be redesigned and “decompressed” on a computer to make more logical sense for human engineers that need to understand how each gene works. Now it is the first functional genome to be designed by a computer.

In 2014, researchers at Autodesk, using CAD-like tools and about $1000 of online DNA synthesis orders built the ΦX174 genome in their lab, demonstrating just how easy virus design had become. Back then, I wrote that ΦX174 is an emblem of “our biological hopes and fears, and it will continue to symbolize our quest to sequence, synthesize, and design DNA.”

I was trained as a bioengineer during this era of “refactoring” and “CAD” for genome design from modular “parts”. DNA code is readable and writable, but it isn’t understandable enough to be programmed the way that we program computers. We can’t sit at a computer terminal and type out exactly what functions to run in what order to get a small icosahedral phage that infects E. coli. We can’t intuitively make sense of the overlapping code of genes in the phage, some read forward, some read backward.

To really program biology, the belief was that we had to be able to simplify. We needed to pull each piece out and make sense of them one at a time through detailed characterization so that we could manually reassemble these parts back into a new program that did what we wanted. For DNA to make sense as a program, to be manipulated on a computer, we had to reduce its resolution, pixelating the genome and losing some of the meaning in the code that we couldn’t yet translate.

Today, genome design is coming into focus, but not through the path that synthetic biologists expected during that era. Biological programming doesn’t look like brute-force “modeling every cog and gear…designing genomes piece by piece and troubleshooting each failure” as Niko writes in his great piece covering Arc’s new ΦX174 genome. Maybe true biological programming will look like vibe coding.

Functional data about how different sequences function in the real world isn’t a parts registry, but a training set. A biological function we can explain in English isn’t the outcome of a program but the start of a conversation. Design-build-test-learn starts to look like prompt engineering coupled to a lab that can compile the code.

As a biologist in love with the rich complexity of biology, I was never fully satisfied by the engineering vision of synthetic biology. The stripped down minimalism of a refactored gene circuit left me feeling cold and empty, nostalgic for something I couldn’t fully understand or name, like untranslatable words for feelings in other languages. We thought that to learn how to program biology we had to strip it down until we could understand it and control it. With enough data and compute, a different kind of biological design is coming into focus. What might be possible if we could vibe with biology instead?

Such a great article. Great summary of the historical context. So much interesting stuff happening in syn bio, and I agree with you that the very rational bioinformatics/computer science angle leaves something to be desired: the creative messiness and poetry of life. I like your idea of vibing biology. :)

Nice article! 🙌